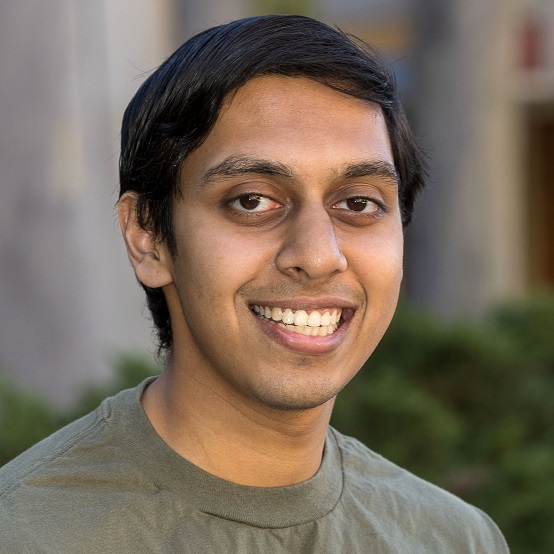

I am a quantitative researcher at G-Research. Before this, I earned my PhD in mathematics from UCLA, where I was fortunate to be advised by Guido Montúfar. My PhD research focused on the mathematical theory of neural networks, in particular on understanding optimization and generalization under moderate-dimensional scaling of parameterization, dataset dimensionality, and sample size.